A research paper titled “Exclusion of Thought: Mitigating Cognitive Load in Large Language Models for Enhanced Reasoning in Multiple-Choice Tasks” was recently accepted by ACL 2025 (the 63rd Annual Meeting of the Association for Computational Linguistics), one of the most prestigious international conferences in natural language processing (NLP) and a Class-A conference recognized by the China Computer Federation (CCF). The paper was authored by a research team from the Engineering Research Center of the Ministry of Education on Text Computing & Cognitive Intelligence at Guizhou University (GZU). This marks the first time a paper from any university or research institution in Guizhou Province has been accepted by ACL, and it is the province's first paper at a top-tier international conference in the field of Large Language Models (LLMs), representing a significant breakthrough for GZU in cognitive intelligence and natural language understanding. The first author of the paper is Fu Qihang, a master’s student admitted in 2023 to the College of Computer Science and Technology, and the corresponding authors are Professor Qin Yongbin and Professor Huang Ruizhang.

With the rapid advancement of large language model technologies represented by GPT-4 and DeepSeek, LLMs have emerged as a central research focus in the field of artificial intelligence. Powered by massive-scale parameters and powerful language modeling capabilities, LLMs have demonstrated impressive performance across a range of NLP tasks such as text generation, question answering, and machine translation. However, when tackling multiple-choice questions, LLMs are often misled by distractor options. These incorrect choices impose a form of “cognitive load” on the model, leading to answers that may appear plausible but are ultimately incorrect.

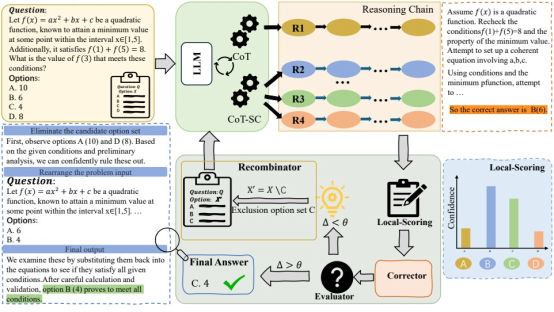

To address this issue, the paper introduces a novel reasoning method for LLMs inspired by human thinking processes, termed “Exclusion of Thought” (EoT). The EoT method guides LLMs to actively exclude incorrect options, reducing external interference and mitigating cognitive load during reasoning. At the same time, EoT records the model’s exclusion process, which makes the reasoning more transparent and interpretable and offers a promising approach to the “black-box problem” in AI. As a plug-and-play strategy, EoT can be integrated with any existing prompt engineering technique. The study evaluated the method in extensive experiments on six multiple-choice datasets and five popular LLMs. The results showed consistent performance improvements, particularly in complex reasoning tasks where traditional methods underperform.
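The general idea of excluding options before answering can be illustrated with a minimal sketch. This is not the paper's implementation: the prompts, the stopping rule (`keep`), and the names `answer_by_exclusion`, `format_prompt`, and `fake_model` are all assumptions made for illustration, and `fake_model` is a deterministic stand-in for a real LLM call.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical model interface: takes a prompt string, returns the reply text.
AskFn = Callable[[str], str]


def format_prompt(question: str, options: Dict[str, str], instruction: str) -> str:
    """Render the question, the remaining options, and an instruction as one prompt."""
    lines = [question]
    lines += [f"{label}. {text}" for label, text in options.items()]
    lines.append(instruction)
    return "\n".join(lines)


def answer_by_exclusion(
    question: str, options: Dict[str, str], ask: AskFn, keep: int = 2
) -> Tuple[str, List[str]]:
    """Ask the model to drop unlikely options one at a time, then answer.

    Returns the final answer label and the ordered list of excluded labels,
    which serves as a record of the exclusion process.
    """
    remaining = dict(options)
    excluded: List[str] = []
    while len(remaining) > keep:
        prompt = format_prompt(
            question,
            remaining,
            "Which single option is least likely to be correct? Reply with its label only.",
        )
        reply = ask(prompt).strip().upper()
        if reply not in remaining:
            break  # reply could not be matched to an option: stop excluding
        excluded.append(reply)
        del remaining[reply]
    prompt = format_prompt(
        question, remaining, "Choose the correct option. Reply with its label only."
    )
    return ask(prompt).strip().upper(), excluded


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM (assumption): excludes the last listed option,
    and answers with the first listed option."""
    labels = [line[0] for line in prompt.splitlines() if line[1:3] == ". "]
    return labels[-1] if "least likely" in prompt else labels[0]


answer, excluded = answer_by_exclusion(
    "2 + 2 = ?", {"A": "4", "B": "5", "C": "22", "D": "3"}, fake_model
)
print(answer, excluded)
```

Because the loop only rewrites the option list and leaves the question untouched, a wrapper of this kind could sit in front of other prompting strategies, which is the sense in which such a method is plug-and-play.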

The paper’s acceptance into ACL’s main conference demonstrates GZU’s growing research strength in artificial intelligence, particularly in cognitive intelligence and language reasoning. It also highlights the university’s achievements in strengthening its computer science discipline and building high-performing research teams in artificial intelligence. The university’s research team in text computing and cognitive intelligence was established in 2008. After more than a decade of development, it has evolved into a distinctive research group engaged in text mining and analysis, NLP, knowledge fusion, cognitive intelligence, medical imaging, and data intelligence. In 2022, it was approved as an Engineering Research Center of the Ministry of Education on Text Computing and Cognitive Intelligence. Its research currently focuses on interdisciplinary applications of AI, including internet text analysis, cognitive analysis, legal-domain LLMs, smart courts, intelligent justice systems, medical imaging analysis, and precision diagnostics.

Editor: Zhang Chan

Chief Editor: Li Xufeng

Senior Editor: Ding Long

Translator: Jia Haibo